Recent research has explored the utilization of pre-trained text-image discriminative models, such as CLIP, to tackle the challenges associated with open-vocabulary semantic segmentation. However, it is worth noting that the alignment process based on contrastive learning employed by these models may unintentionally result in the loss of crucial localization information and object completeness, which are essential for achieving accurate semantic segmentation.

More recently, there has been an emerging interest in extending the application of diffusion models beyond text-to-image generation tasks, particularly in the domain of semantic segmentation. These approaches utilize diffusion models either for generating annotated data or for extracting features to facilitate semantic segmentation. This typically involves training segmentation models by generating a considerable amount of synthetic data or incorporating additional mask annotations. To this end, we uncover the potential of generative text-to-image conditional diffusion models as highly efficient open-vocabulary semantic segmenters, and introduce a novel training-free approach named DiffSegmenter.

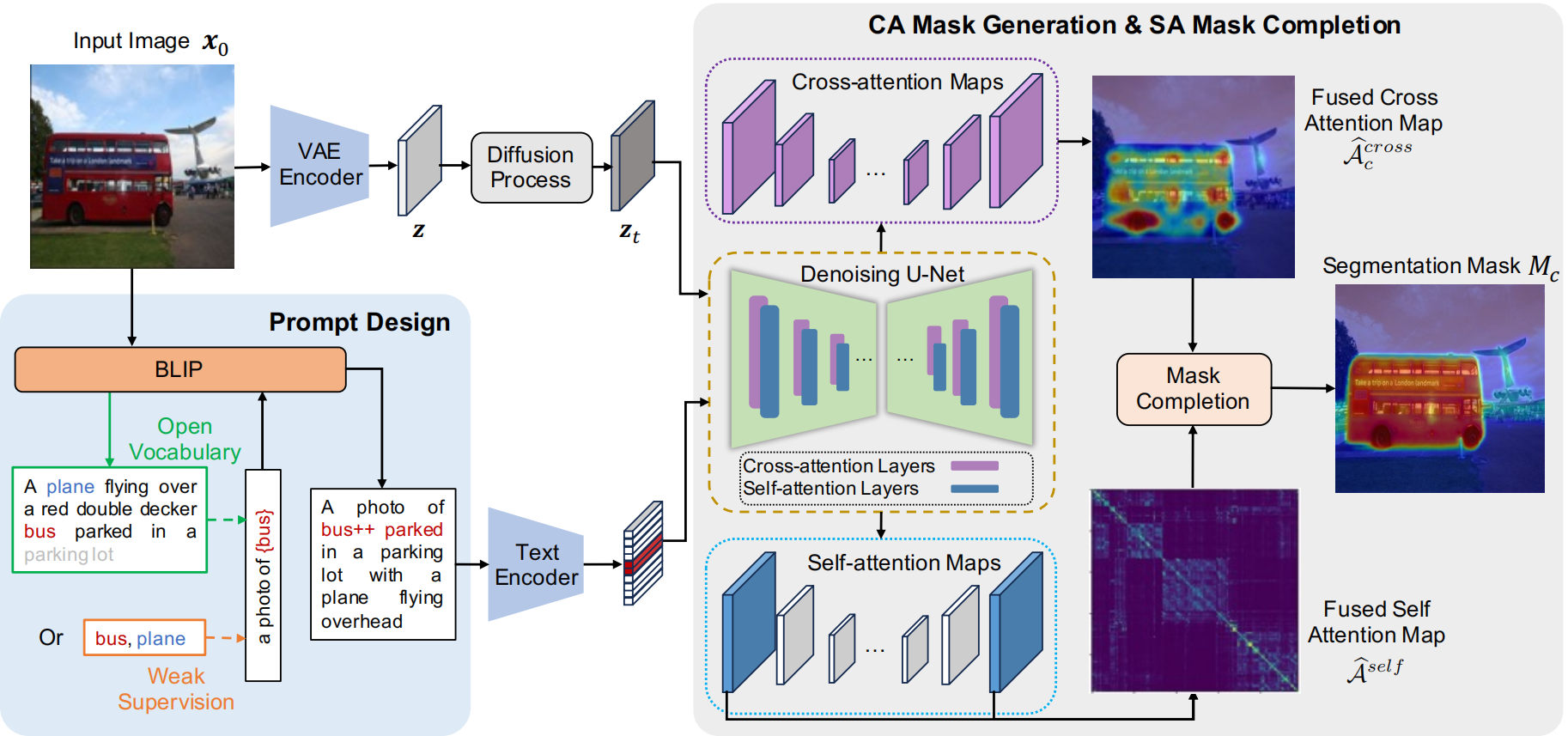

Specifically, by feeding an input image and candidate classes into an off-theshelf pre-trained conditional latent diffusion model, the crossattention maps produced by the denoising U-Net are directly used as segmentation score maps, which are further refined and completed by the followed self-attention maps. Additionally, we carefully design effective textual prompts and a category filtering mechanism to further enhance the segmentation results. Extensive experiments on three benchmark datasets show that the proposed DiffSegmenter achieves impressive results for open-vocabulary semantic segmentation.

An input image and enhanced candidate class tokens by the BLIP-based prompt design module are fed into an off-the-shelf pre-trained conditional latent diffusion model. The fused cross-attention maps produced by the denoising U-Net are treated as the initial segmentation score maps, which is further refined and completed by the fused self-attention maps of the U-Net. Note that the parameters of all the involved models are frozen without any tuning.

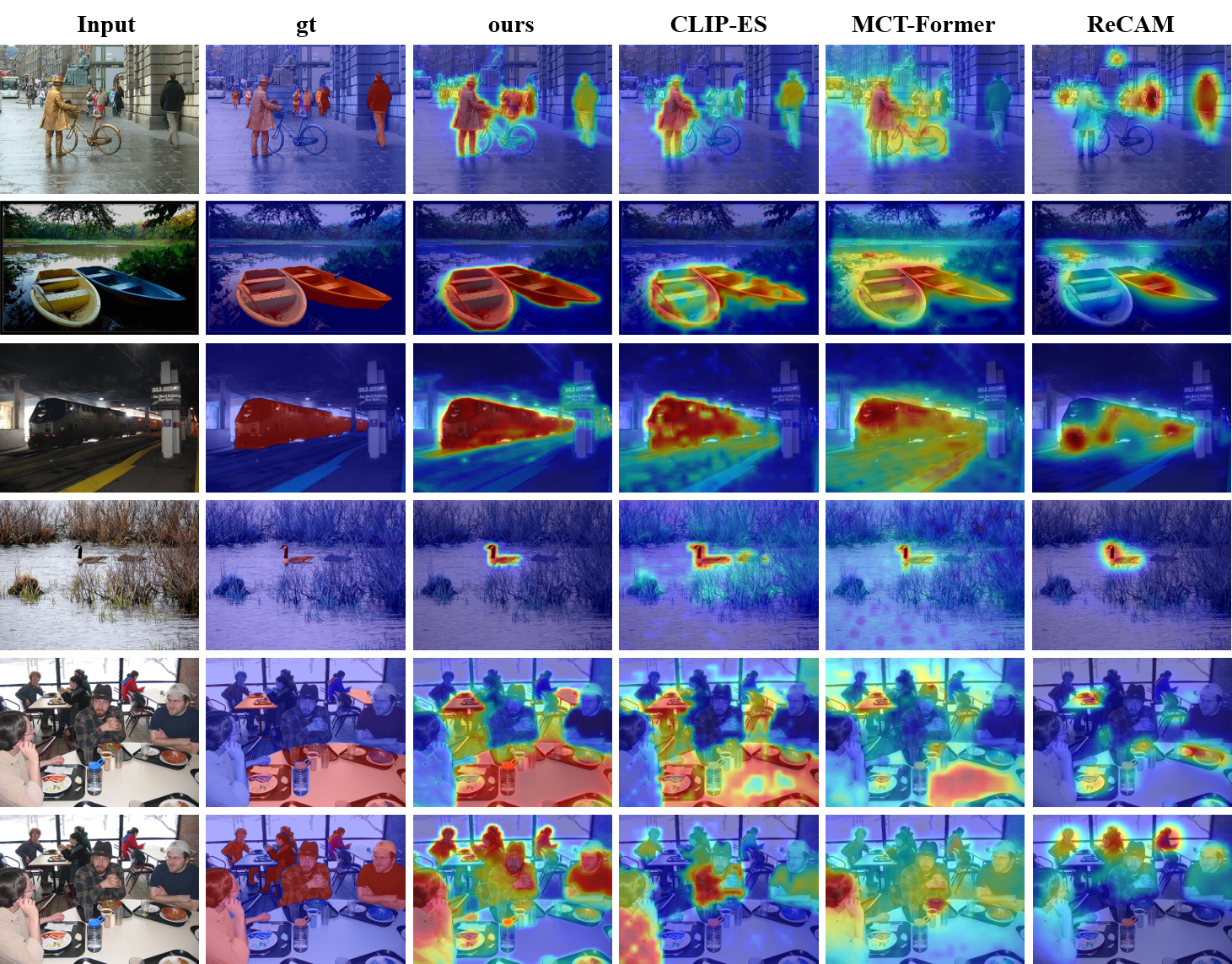

Here are some more segmentation results for open-vocabulary and weakly-supervised semantic segmentation. We can see that our proposed DiffSegmenter can achieve better segmentation results than previous methods.

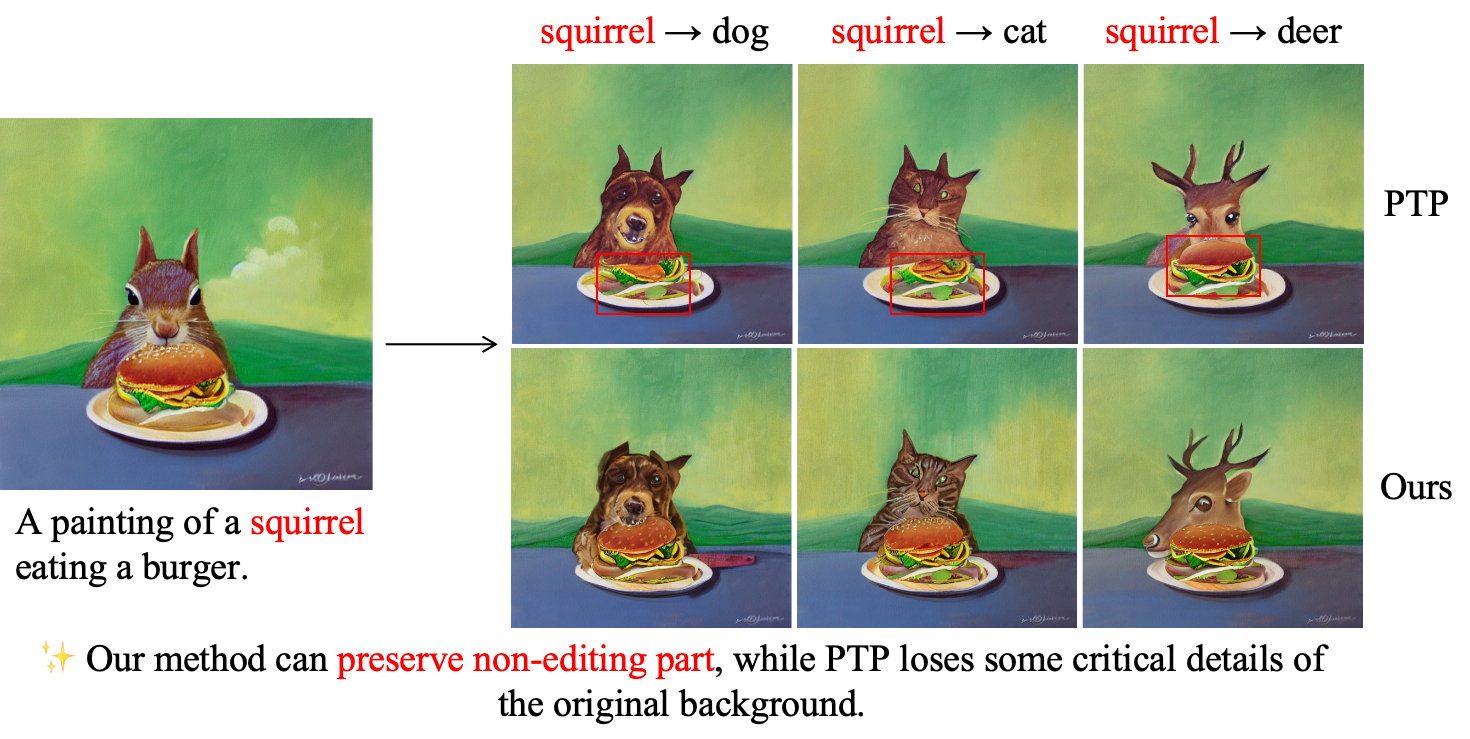

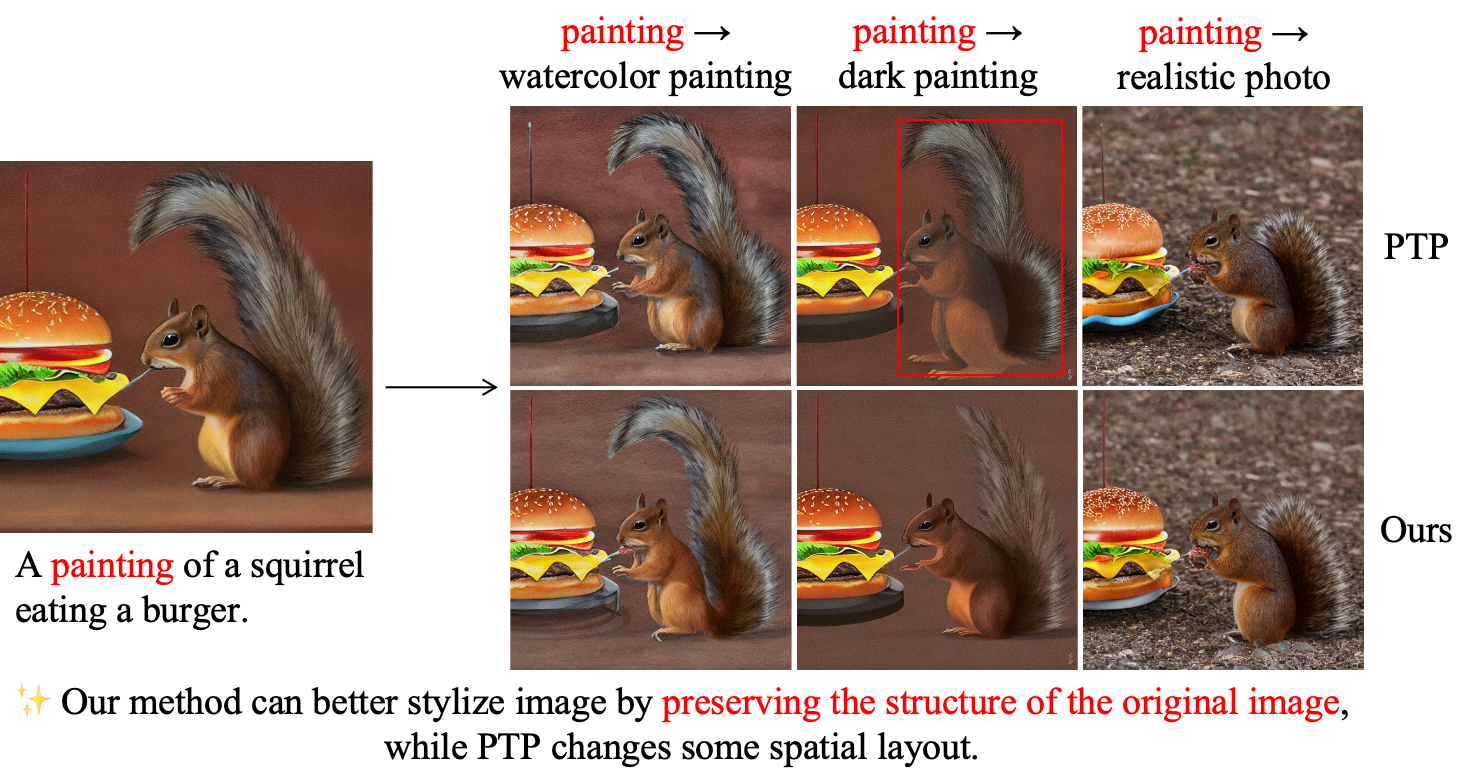

Diffusion-based image editing methods(Prompt-to-Prompt etc.) highly rely on the quality of object segmentation masks. Here we show that our proposed DiffSegmenter can improve the quality of such masks, and thus improve the image editing results.

@article{wang2023diffusion,

title={Diffusion model is secretly a training-free open vocabulary semantic segmenter},

author={Wang, Jinglong and Li, Xiawei and Zhang, Jing and Xu, Qingyuan and Zhou, Qin and Yu, Qian and Sheng, Lu and Xu, Dong},

journal={arXiv preprint arXiv:2309.02773},

year={2023}

}